Researchers in the US and China have shown that they can control speech recognition systems using frequencies that humans cannot hear.

As The New York Times reports, Siri, Alexa and Google Assistant are all affected. The researchers set out to prove that these devices can be controlled with commands that are inaudible to humans and therefore go unnoticed, which opens the door to misuse. After all, if you cannot hear what your smartphone is responding to, how are you supposed to keep track of it?

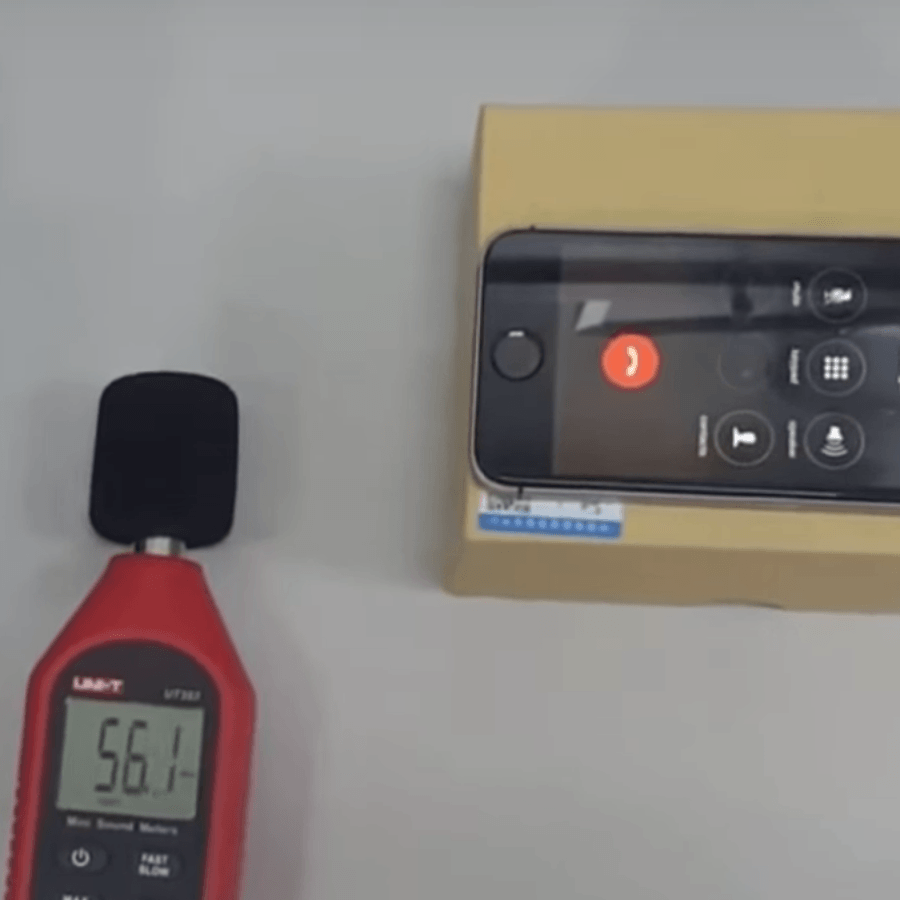

The team even managed to embed such commands in songs. A listener hears only the music, but Siri picks up the hidden commands, responds to them and could, for example, call an expensive phone number.

https://www.youtube.com/21HjF4A3WE4

Apple seems to be at least somewhat aware of the problem. Siri is affected as well, but for security-related actions, such as opening a door lock by voice command, the iPhone or iPad must first be unlocked, which adds a bit of extra security.

Nevertheless, it is fair to ask why voice assistants react to frequencies that humans cannot hear at all. In principle, it would be enough for them to respond only to sounds a human can produce; perhaps this will become the standard behavior with upcoming updates.

In any case, you should be aware that voice assistants currently appear easier to manipulate and abuse than other systems: they are always listening and will execute a command without asking, for example, for a password before important actions.